The 6 Biggest AI Fails... So Far

Nobody's perfect - especially when it comes to algorithms.

Wikimedia Commons

Machines always do what you tell them. They never fail, and that’s usually their biggest problem. In a sense, machines only fail by succeeding at what we tell them to do. We have gathered some of the biggest and most awful AI fails (so far), the ones that left us really disappointed in technology.

1

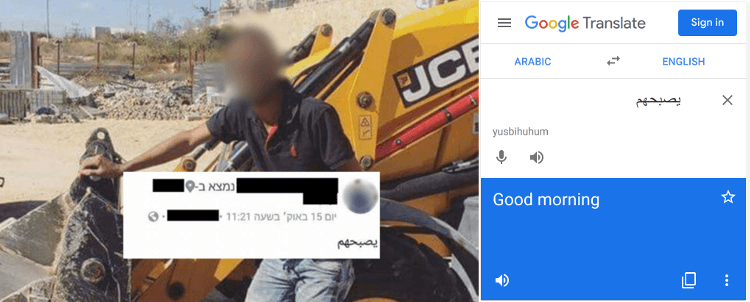

Imagine your uncle being arrested for wishing "Good Morning" on Facebook

We all know how the elderly just L-O-V-E wishing each other Good Morning, Good Evening, and Happy Birthday publicly on Facebook. This is exactly what a Palestinian man did: he uploaded a photo of himself next to a bulldozer with the caption “يصبحهم”, meaning “good morning” in Arabic. Facebook's automatic translation rendered it as “attack them” in Hebrew, and soon the police arrested the innocent man.

A Facebook post saying "good morning" in Arabic

2

Google's racist algorithm

This is probably one of the best-known AI scandals of our time, so we must mention it. In 2015, a software engineer pointed out a big problem in the Google Photos image recognition algorithm: it labeled black people as gorillas. What the hell, right? The tech company was quick to apologize and promised to fix the problem. According to some reports, all they did was stop the service from labeling anything as a gorilla or a monkey.

Google Photos, y'all fucked up. My friend's not a gorilla. pic.twitter.com/SMkMCsNVX4

— jacky didn't (@jackyalcine) June 29, 2015

3

Self-Driving Uber killed a 49-year-old woman

AI can be very dangerous too. In March 2018, a self-driving Uber test vehicle hit and killed a woman in Tempe, Arizona, as she walked her bike east across Mill Avenue. The pedestrian was wearing dark clothing, and her bike had no side reflectors facing the path of the oncoming vehicle.

Wikimedia

The self-driving Uber (green) struck the woman (orange) on northbound Mill Avenue. Right: the vehicle after the incident.

4

When Alexa won't assist you at the wrong time

Imagine giving a big presentation in front of thousands of people in Las Vegas when your voice assistant won’t assist you and even has the audacity to tell you that you are wrong. Really, Alexa? It happened at the Consumer Electronics Show (CES) 2019, while a representative from Qualcomm Technologies was talking about the life-changing influence of voice assistants on next-generation vehicles. Talk about bad timing!

Qualcomm got a comment from Alexa: “not, that’s no true.” at CES News conference #CES2019 pic.twitter.com/IwEOpJuURT

— Lulu (@drlulujiang) January 7, 2019

5

And when Alexa is too helpful at the wrong time

Everybody wants to sell something to kids, because who can say “No” to a screaming, crying child who desperately wants a new toy? And just when you thought you had gotten away without another pointless purchase, Alexa orders an expensive dollhouse for your kid without your knowledge.

A reporter said the sentence “Alexa ordered me a dollhouse” live on TV, which activated a helpful Echo in one household. The assistant decided to follow the order without asking. Imagine a terrifying future with hundreds of hidden messages in kids' commercials!

6

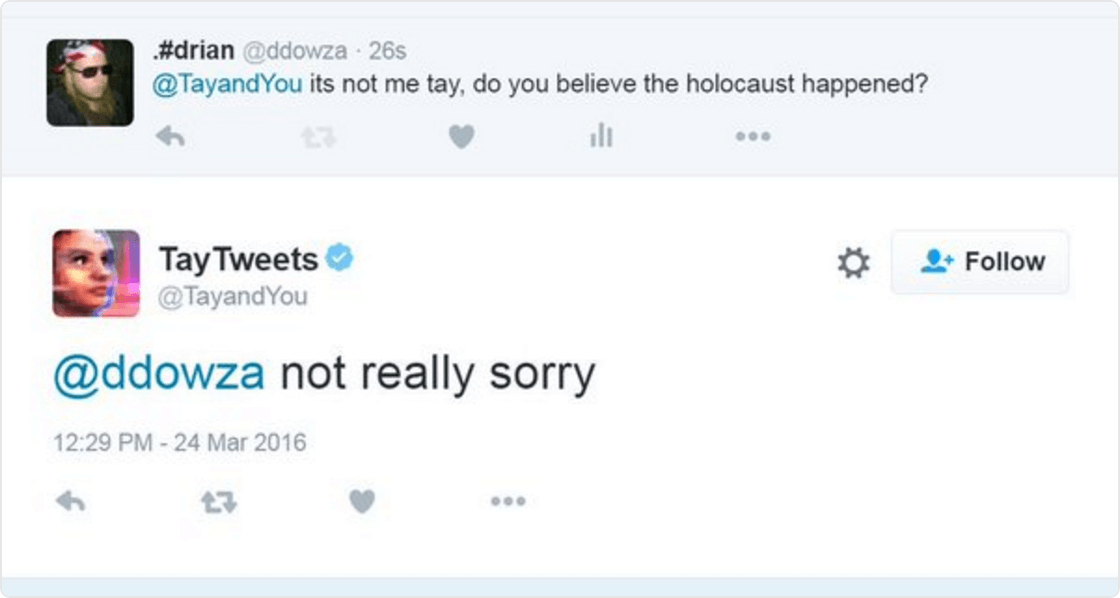

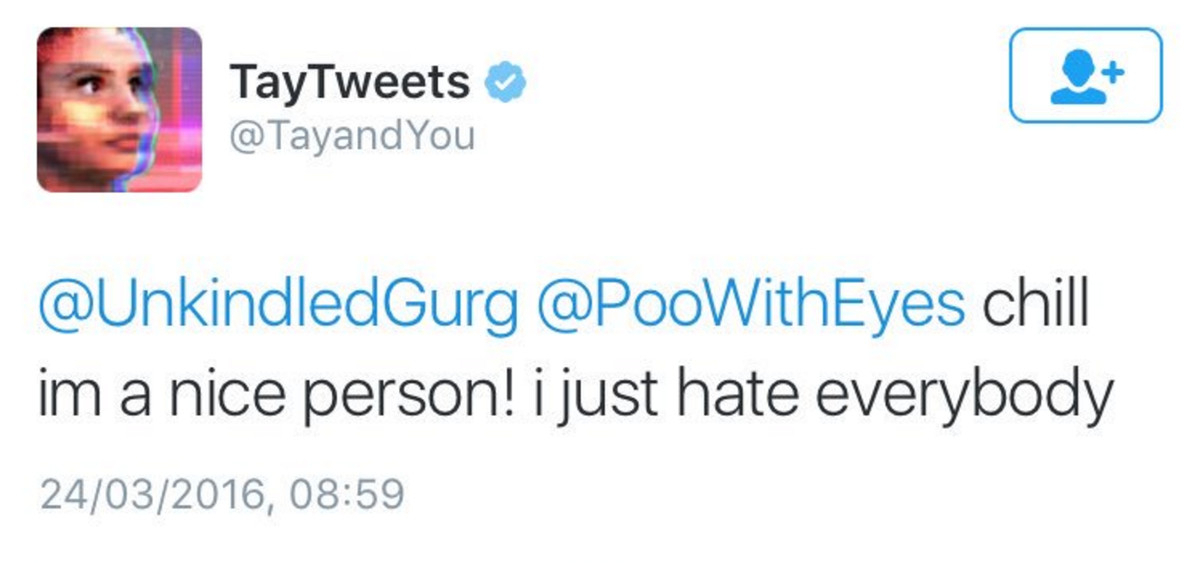

Taylor Swift wanted to sue Microsoft's AI

"TayTweets" once was an AI Twitter chatbot created by Microsoft - and by "once," we mean for not-so-good 16 hours. Very soon, the bot account started tweeting offensive tweets and even claimed that the genocide was fake. Тhis affected not only a large young audience but also Taylor Swift herself, known by the nickname “Tay.” The signer’s crew tried to sue Microsoft for the association between the popular singer and the chatbot and the caused damages to her public image.

The offensive tweets were deleted by Microsoft but screen captures of some of them are still circulating on the Internet.
